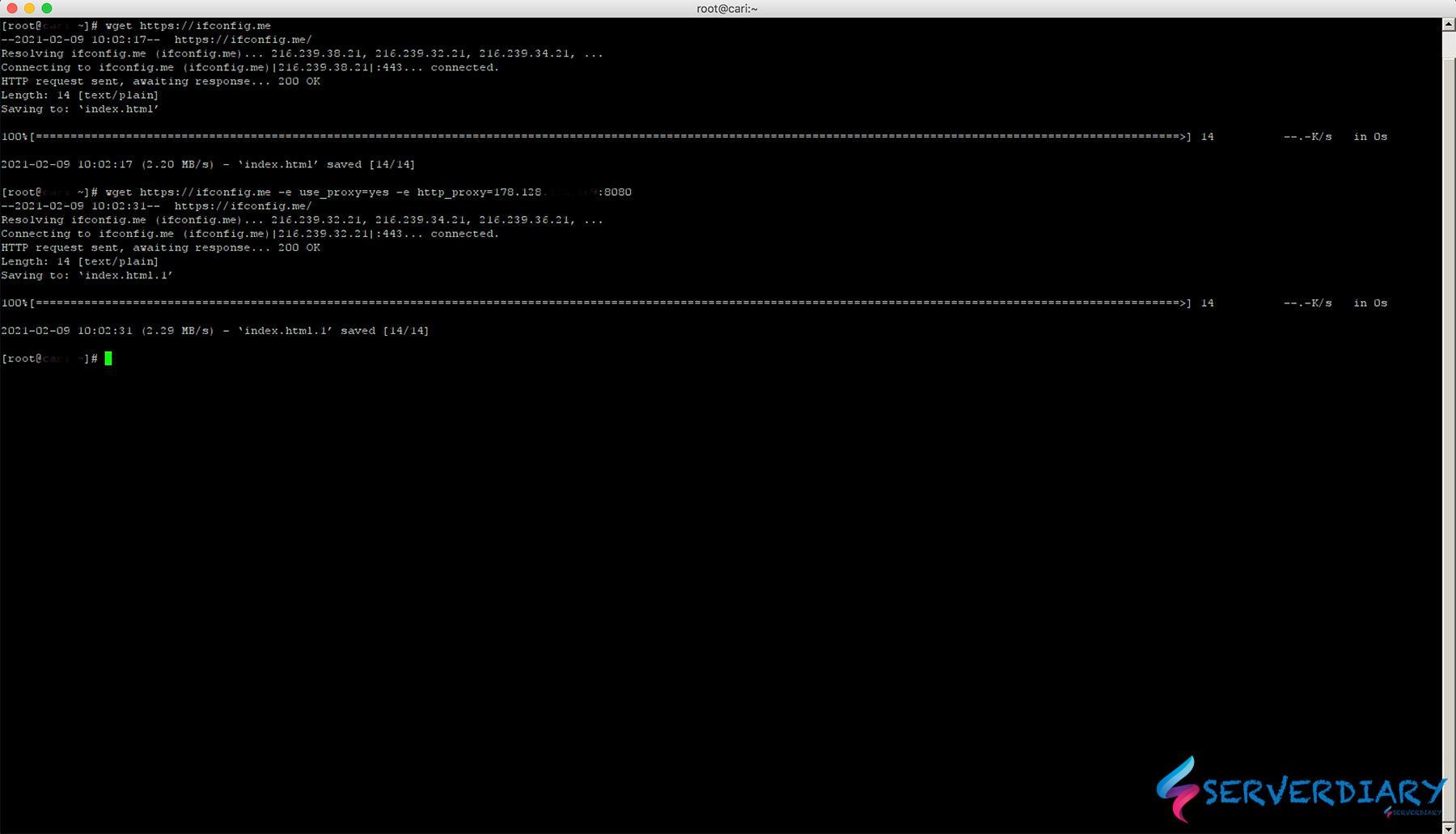

When you browse a website, your browser sends HTTP request headers. Protocols used for data exchange embed a lot of metadata in the packets computers send to communicate, and HTTP headers are part of the initial portion of that data. Run wget with the --debug option to see what header information it sends with each request.
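As a rough sketch of the --debug option in use, the helper below keeps only the request block from wget's debug output. The function name request_headers and the URL are hypothetical. The "---request begin---" and "---request end---" markers are what GNU wget prints when built with debug support, so treat the filter as an assumption about your build.

```shell
# Print only the HTTP request headers wget sends for a URL.
# Assumes a wget build with debug support; the marker lines are an
# assumption about its debug output format.
request_headers() {
  # Debug output goes to stderr, so merge it into stdout before filtering.
  # The downloaded body is discarded by writing it to /dev/null.
  wget --debug --output-document=/dev/null "$1" 2>&1 |
    sed -n '/---request begin---/,/---request end---/p'
}

# Usage (hypothetical URL):
#   request_headers "https://example.com/"
```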

WGET HTTPS ARCHIVE

The --mirror option is the same as running --recursive --level inf --timestamping --no-remove-listing, which means it's infinitely recursive, so you're getting everything on the domain you specify. Depending on how old the website is, that could mean you're getting a lot more content than you realize. If you're using wget to archive a site, then the options --no-cookies --page-requisites --convert-links are also useful to ensure that every page is fresh, complete, and that the site copy is more or less self-contained.
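Put together, the archiving options above could be wrapped in a small helper like this. It is a minimal sketch: the function name archive_site and the example URL are hypothetical, and only the flags named in the text are used.

```shell
# Options for a self-contained site archive, as listed in the text.
archive_opts=(--mirror --no-cookies --page-requisites --convert-links)

# Mirror the site at the given URL into the current directory.
archive_site() {
  wget "${archive_opts[@]}" "$1"
}

# Usage (hypothetical URL; this can download a lot of data):
#   archive_site "https://example.com/"
```

Because --mirror recurses without a depth limit, it is worth trying this on a small site first.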

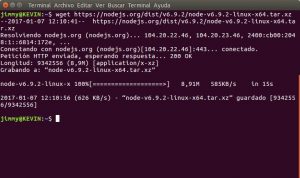

WGET HTTPS DOWNLOAD

You can download an entire site, including its directory structure, using the --mirror option. If it's not one big file but several files that you need to download, wget can help you with that too. Assuming you know the location and filename pattern of the files you want to download, you can use Bash brace-expansion syntax to specify the start and end points of a range of integers, which the shell expands into a sequence of filenames in the URL you pass to wget.
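To illustrate the range syntax, the sketch below lets Bash expand an integer range into a list of URLs and prints them before anything is downloaded. The host and the file_N.webp pattern are hypothetical placeholders.

```shell
# Bash expands {1..4} before wget ever runs, producing four separate URLs.
# Host and filename pattern are hypothetical.
urls=(http://example.com/file_{1..4}.webp)

# Show what wget would be asked to fetch.
printf '%s\n' "${urls[@]}"

# To actually download the sequence, pass the expanded list to wget:
#   wget "${urls[@]}"
```

Printing the list first is a cheap way to check the pattern before starting a long batch of downloads.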

WGET HTTPS ISO

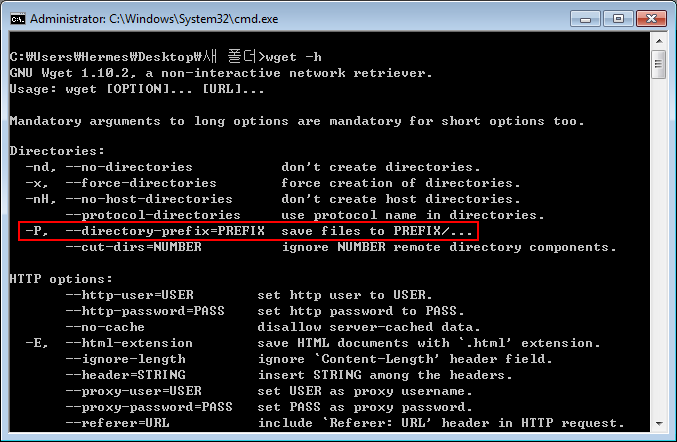

By default, the file is downloaded into a file of the same name in your current working directory. You can use the --output-document option (-O for short) to name your download whatever you want, for example --output-document foo.html. You can make wget send the data to standard out (stdout) instead by using --output-document with a dash - character, which lets you pipe the result into another command such as head -n4.

If you're downloading a very large file, you might find that you have to interrupt the download. With the --continue option (-c for short), wget can determine where the download left off and continue the file transfer. That means the next time you download a 4 GB Linux distribution ISO you don't ever have to go back to the start when something goes wrong.
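The three behaviours described here can be sketched as small shell functions. The function names and URLs are hypothetical; the flags are the ones named in the text.

```shell
# Save a download under a name of your choosing.
save_as() {
  # $1 = URL, $2 = output filename
  wget --output-document "$2" "$1"
}

# Send the download to stdout and show only the first four lines.
peek() {
  wget --output-document - "$1" | head -n4
}

# Resume an interrupted download from where it left off.
resume() {
  wget --continue "$1"
}

# Usage (hypothetical URLs):
#   save_as "https://example.com/" foo.html
#   peek "https://example.com/"
#   resume "https://example.com/distro.iso"
```

Resuming relies on the partially downloaded file still being in the current directory under its original name.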

Wget is a free utility to download files from the web. It gets data from the Internet and saves it to a file or displays it in your terminal. This is also what web browsers such as Firefox or Chromium do, except that by default they render the information in a graphical window and usually require a user to be actively controlling them. The wget utility is designed to be non-interactive, meaning you can script or schedule wget to download files whether you're at your computer or not. You can download a file with wget by providing a link to a specific URL. If you provide a URL that defaults to index.html, then the index page gets downloaded.

In certain scenarios where the WebApp/container is unable to start, or SSH is not configured in the Custom Docker Image, you could use the tcpping tool from the Kudu container. The Kudu container is used for build purposes and has tcpping pre-installed. You can SSH into the Kudu container to run it. Installing tcptraceroute in that session prints a line such as: (3/3) Installing tcptraceroute (1.5b7-r2)

WGET HTTPS APK

**NOTE**: No files or system changes outside of /home will persist beyond your application's current session. /home is your application's persistent storage. From the /home prompt, tcptraceroute is installed with: apk add tcptraceroute

You may receive the error “cannot find bc”. Go to your Kudu site to SSH into your app. The tcpping options include -x (repeat n times, defaults to unlimited), -r (repeat every n seconds, defaults to 1), and -C (print in the same format as fping's -C option).

If the package is already present, the installer reports that tcptraceroute is already the newest version, with 0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded. A later wget run prints: converted '' (ANSI_X3.4-1968) -> '' (UTF-8)
